Samsung is enhancing AI capabilities by integrating Processing-in-Memory (PIM) into High Bandwidth Memory (HBM) configurations. PIM handles some logic functions inside the memory itself by integrating an AI engine, called the Programmable Computing Unit (PCU), into the memory core.

To meet the exponentially growing demands that hyperscale AI models (like ChatGPT) place on traditional memory solutions, Samsung Electronics has integrated an AI-dedicated semiconductor into its high-bandwidth memory (HBM). Called Processing-in-Memory (PIM), the technology places processors directly inside the DRAM. Offloading some of the data calculation work from the processor onto the memory itself reduces data movement and improves the energy and data efficiency of AI accelerator systems.

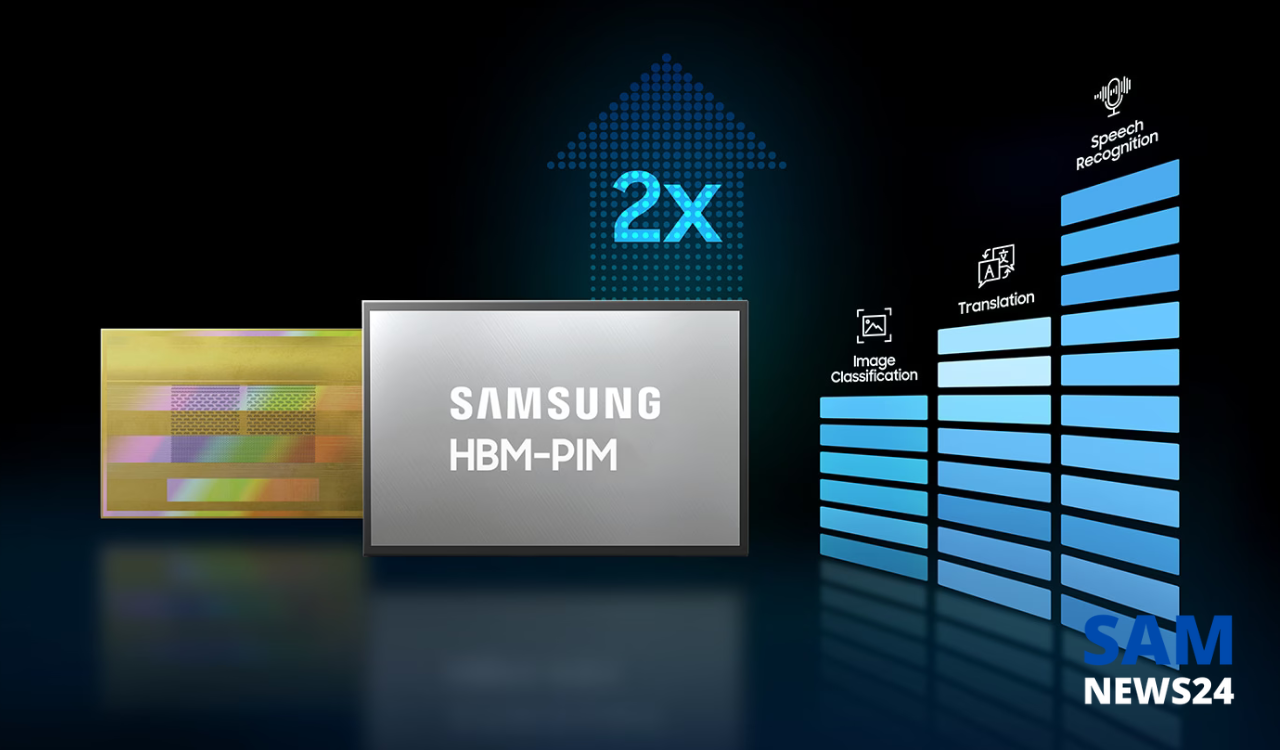

2X performance enhancement

Compared to previous memory solutions, Samsung’s PIM can improve performance up to 4 times via the Programmable Computing Unit (PCU). How? Much like multi-core processing in a CPU, the PCU enables parallel processing inside the memory to enhance performance.

70% less energy

To avoid extra load and power consumption in AI applications, PIM reduces energy consumption by 70% in systems that use it, compared to existing HBM.

Easy to adopt and offers expanded applications

PIM can be applied without changing the existing memory ecosystem, and it can be integrated with HBM as well as LPDDR and GDDR memory.
